#013 - From Batch ETL to Real-Time: A 4-Step Risk Mitigation Framework for Data Leaders

Don't Let Your Real-Time Data Project Fail Silently. Learn the Key to Successful ETL Streaming Migration.

Hey there,

Welcome to this week's edition, where we tackle one of the most challenging transitions in modern data architecture: migrating from batch ETL to real-time streaming.

Introduction

In today’s fast-paced business environment, traditional batch ETL processes often introduce data delays and resource spikes that limit agility. Moving to streaming offers real-time responsiveness, but it also brings hidden challenges. Handled well, the shift can significantly strengthen your data strategy.

Here is what we are going to discuss today:

The fundamental architectural differences between batch and streaming

Three major risk categories you must address for successful migration

A practical four-phase framework for risk mitigation during transition

Let’s dive in.

The Fundamental Architectural Shift

Quick Summary: Batch ETL works with defined data sets, while streaming ETL tackles an endless flow of data in real time.

Transitioning from batch ETL to streaming ETL is more than a change of tooling, and recognizing how the two models differ is vital for a smooth and successful shift.

Batch ETL processes bounded datasets with defined start and end points, running at regular intervals. Because each batch is processed as a unit, a data quality problem in a few records can fail or delay the entire run.

Streaming architectures, by contrast, process each record as it arrives from an unbounded source. They require continuously allocated resources, and because there is no natural end point at which to stop and inspect results, errors must be handled record by record without halting the pipeline.

This transition affects infrastructure and monitoring, and it calls for new approaches to data processing. Understanding these differences up front is what makes the new possibilities of streaming achievable.
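To make the contrast concrete, here is a minimal sketch, assuming records are dictionaries with a hypothetical `amount` field. The batch function sees a bounded list and fails as a whole on one bad record; the streaming function consumes an iterator that could be unbounded, emits a running result after every record, and skips bad records instead of stopping.

```python
from typing import Iterable, Iterator


def batch_total(records: list[dict]) -> float:
    # Batch: the full, bounded dataset is available up front.
    # A single malformed record raises and fails the whole run.
    return sum(float(r["amount"]) for r in records)


def streaming_totals(records: Iterable[dict]) -> Iterator[float]:
    # Streaming: records arrive one at a time from a possibly unbounded
    # source, so the "result" is a running value emitted after each event.
    total = 0.0
    for r in records:
        try:
            total += float(r["amount"])
        except (KeyError, ValueError):
            # A bad record must not stop the pipeline. This sketch simply
            # skips it; a real pipeline would route it to a dead letter queue.
            continue
        yield total
```

Because `streaming_totals` is a generator, it works the same way over an endless source; the caller decides how many results to consume.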

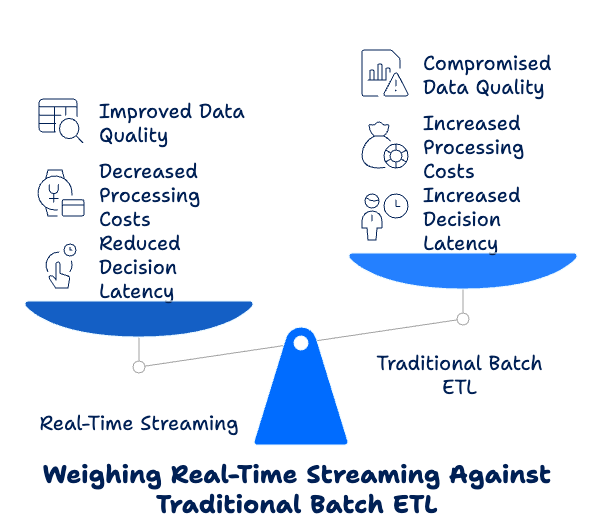

The Business Case for Real-Time Streaming

Before diving into implementation, let's clarify why organizations are making this shift. Real-time streaming offers several compelling advantages over traditional batch ETL:

Reduced decision latency: Insights are available in seconds instead of hours or days

Potentially lower processing costs: Smaller, continuous workloads replace resource-intensive batch jobs and their peak capacity requirements

Improved data quality: Issues are identified as records arrive, close to the source, rather than discovered during batch failures

Enhanced business agility: Ability to respond to events as they happen, not after the fact

Architectural simplification: Can eventually eliminate complex scheduling dependencies

However, these benefits come with significant implementation challenges that must be addressed through a structured migration approach.

The Three Major Risk Categories

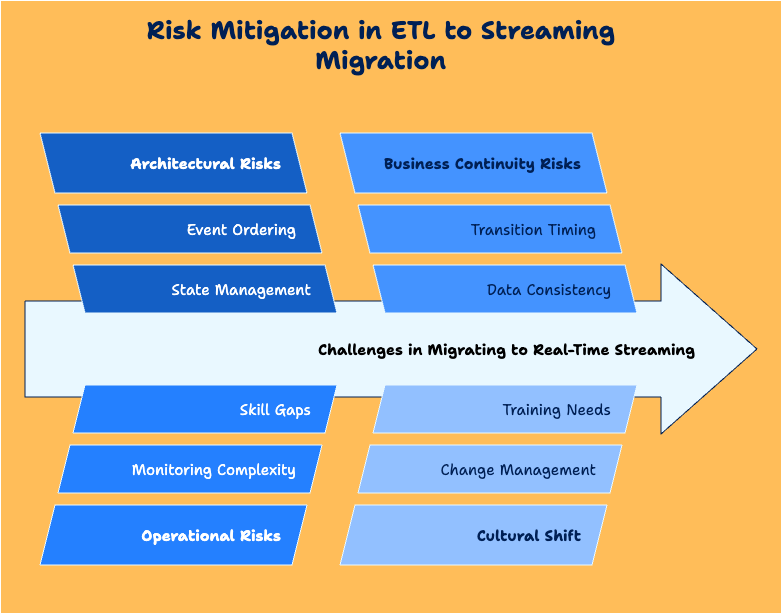

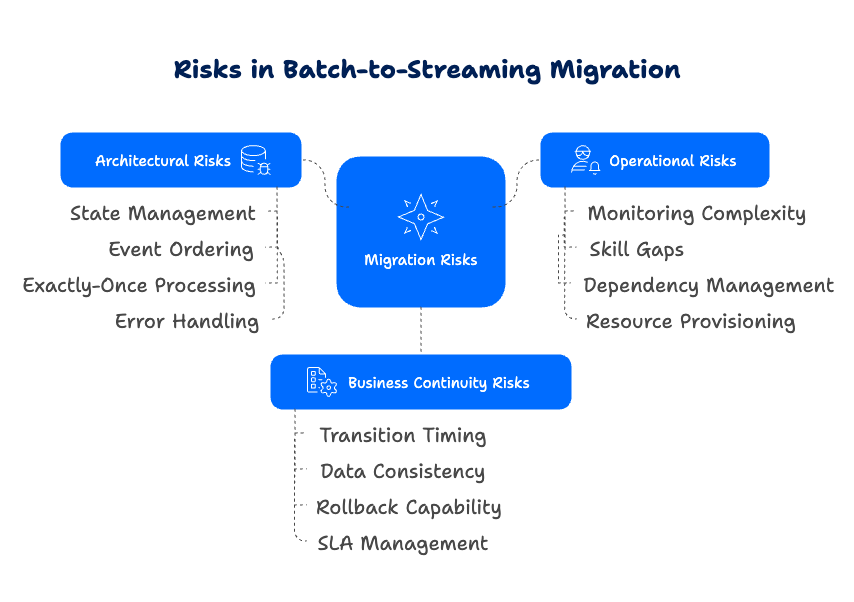

Successful batch-to-streaming migrations must address three primary risk categories:

1. Architectural Risks

Batch and streaming architectures follow fundamentally different paradigms. Batch processing is designed around static datasets with clear beginnings and endings, while streaming deals with unbounded, continuous data flows. This paradigm shift creates several architectural challenges:

State management: Streaming systems must maintain state across processing windows

Event ordering: Ensuring proper sequencing when messages arrive out of order

Exactly-once processing: Preventing duplicate processing while ensuring no events are missed

Error handling: Managing failures without stopping the entire pipeline
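The first three risks usually show up together in even the simplest aggregation. The sketch below is a hypothetical, in-memory event-time tumbling window: it keeps state per window (state management), accepts out-of-order events until a watermark passes the window end (event ordering), and ignores redelivered events by ID, which is one common way to approximate exactly-once results. It does not provide end-to-end exactly-once guarantees; engines such as Apache Flink or Kafka Streams handle that with checkpointing and transactions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: str
    event_time: int  # seconds, assigned at the source (event time)
    value: float


class TumblingWindowAggregator:
    """Sums event values per fixed-size event-time window (hypothetical helper)."""

    def __init__(self, window_size: int, allowed_lateness: int) -> None:
        self.window_size = window_size
        self.allowed_lateness = allowed_lateness
        self.sums: dict[int, float] = defaultdict(float)  # window start -> sum
        self.seen_ids: set[str] = set()  # unbounded here; real systems expire IDs
        self.max_event_time = 0
        self.late_events: list[Event] = []

    def watermark(self) -> int:
        # "We assume no event older than this will still arrive."
        return self.max_event_time - self.allowed_lateness

    def process(self, event: Event) -> list[tuple[int, float]]:
        if event.event_id in self.seen_ids:
            return []  # duplicate delivery: already counted
        self.seen_ids.add(event.event_id)
        window_start = event.event_time - event.event_time % self.window_size
        if window_start + self.window_size <= self.watermark():
            self.late_events.append(event)  # its window was already emitted
            return []
        self.sums[window_start] += event.value
        self.max_event_time = max(self.max_event_time, event.event_time)
        return self._emit_closed_windows()

    def _emit_closed_windows(self) -> list[tuple[int, float]]:
        # A window is final once the watermark has passed its end.
        wm = self.watermark()
        closed = sorted(w for w in self.sums if w + self.window_size <= wm)
        return [(w, self.sums.pop(w)) for w in closed]
```

With 60-second windows and 10 seconds of allowed lateness, an event at t=30 that arrives after one at t=65 is still counted, a redelivered event is ignored, and the first window is emitted only when an event at t=75 moves the watermark past 60.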

2. Operational Risks

The operational model for real-time systems differs significantly from batch operations:

Monitoring complexity: Continuous processes require different monitoring approaches

Skill gaps: Teams need new skills to support streaming architectures

Dependency management: Upstream and downstream systems may not be ready for real-time

Resource provisioning: Consistent performance requires different resource allocation strategies
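A batch job is monitored by asking "did it finish on time?"; a streaming consumer has no finish line, so a more useful signal is how far it is falling behind. The sketch below assumes a Kafka-style log in which each partition has an end offset (last record written) and a committed offset (last record processed by the consumer group). The `PartitionOffsets` type and the scale-out rule are hypothetical; in practice these numbers come from your broker's admin tooling or metrics.

```python
from dataclasses import dataclass


@dataclass
class PartitionOffsets:
    partition: int
    end_offset: int        # latest offset written by producers
    committed_offset: int  # last offset committed by the consumer group


def consumer_lag(offsets: list[PartitionOffsets]) -> dict[int, int]:
    # Lag = records written but not yet processed, per partition.
    return {p.partition: max(p.end_offset - p.committed_offset, 0) for p in offsets}


def needs_scale_out(lag_history: list[int], threshold: int) -> bool:
    # Hypothetical rule: provision more consumers when total lag exceeds
    # the threshold AND has grown at every sample, i.e. consumers are
    # steadily falling behind rather than absorbing a short burst.
    if len(lag_history) < 2:
        return False
    growing = all(b > a for a, b in zip(lag_history, lag_history[1:]))
    return lag_history[-1] > threshold and growing
```

Requiring sustained growth, not just a high value, is one way to avoid provisioning for brief spikes; the right rule depends on your latency targets.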

3. Business Continuity Risks

Perhaps most critically, maintaining business continuity during migration is essential:

Transition timing: Determining when to switch from batch to streaming

Data consistency: Ensuring data integrity across both systems during transition

Rollback capability: Maintaining the ability to revert if issues arise

SLA management: Meeting existing service level agreements during migration

A Four-Phase Risk Mitigation Framework

To address these risks systematically, I recommend a four-phase approach that has proven successful across multiple enterprise migrations:

Phase 1: Assessment and Capability Building

Key Activities:

Catalog existing batch ETL processes and their business criticality

Identify data flows most suitable for initial streaming implementation

Assess team skills and develop training plans

Establish success metrics for the migration
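Cataloging jobs and choosing pilot candidates can be as simple as scoring each flow. The sketch below is hypothetical: the 1 to 5 scales for criticality, latency benefit, and complexity are assumptions you would replace with your own criteria. It filters out business-critical jobs, then prefers flows where fresher data adds the most value and the migration is simplest.

```python
from dataclasses import dataclass


@dataclass
class BatchJob:
    name: str
    criticality: int      # 1 (low) .. 5 (business critical)
    latency_benefit: int  # 1 (none) .. 5 (large value from fresher data)
    complexity: int       # 1 (simple) .. 5 (many joins and dependencies)


def pilot_candidates(jobs: list[BatchJob], max_criticality: int = 2) -> list[str]:
    # Keep only lower-risk jobs for the pilot...
    eligible = [j for j in jobs if j.criticality <= max_criticality]
    # ...then rank by highest benefit, lowest complexity, name as tie-breaker.
    ranked = sorted(eligible, key=lambda j: (-j.latency_benefit, j.complexity, j.name))
    return [j.name for j in ranked]
```

For example, a business-critical billing job is excluded however much it would benefit, while a low-criticality clickstream feed with a high latency benefit rises to the top.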

Risk Mitigation Techniques:

Conduct a pilot on non-critical data flows: Start with lower-risk data streams to build expertise

Build a streaming competency center: Develop internal expertise before widespread adoption

Implement streaming design patterns training: Ensure teams understand fundamental streaming concepts

Deploy sandbox environments: Provide safe spaces for teams to experiment with streaming technologies

This preparation phase is often rushed or skipped entirely, but it lays the foundation for everything that follows. Organizations that invest in capability building before implementation generally avoid significant rework and project delays later in the migration.

Phase 2: Parallel Implementation

Key Activities:

Design streaming pipelines alongside existing batch processes

Implement real-time data capture mechanisms at sources

Develop reconciliation frameworks to compare batch and streaming outputs

Create fallback mechanisms to batch processing
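Real-time capture at the source is often done with change data capture, where the database's change log is turned into a stream of insert, update, and delete events. The sketch below shows the consuming side under stated assumptions: the `ChangeEvent` shape (key, operation, log sequence number) is hypothetical rather than any specific CDC tool's format, and the target is an in-memory dict. Tracking the last applied sequence number per key makes replaying the same log segment harmless.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    key: str                    # primary key of the source row
    op: str                     # "insert", "update" or "delete"
    lsn: int                    # position in the source's change log
    row: Optional[dict] = None  # new row image (None for deletes)


def apply_changes(target: dict[str, dict], applied_lsn: dict[str, int],
                  events: list[ChangeEvent]) -> None:
    for ev in events:
        # Skip changes at or before the last one applied for this key,
        # so redelivered or replayed events do not corrupt the target.
        if ev.lsn <= applied_lsn.get(ev.key, -1):
            continue
        if ev.op == "delete":
            target.pop(ev.key, None)
        elif ev.op in ("insert", "update"):
            target[ev.key] = dict(ev.row or {})
        else:
            raise ValueError(f"unknown op: {ev.op}")
        applied_lsn[ev.key] = ev.lsn
```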

Risk Mitigation Techniques:

Implement change data capture (CDC): Use CDC patterns to capture source changes without modifying existing systems

Develop dual-write mechanisms: Write to both systems during transition to enable comparison

Establish automated reconciliation: Build an automated comparison between batch and streaming outputs

Create circuit breakers: Design automatic fallbacks to batch processing when streaming issues occur
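Automated reconciliation boils down to comparing keyed outputs from both pipelines and classifying the differences. A minimal sketch, assuming both pipelines produce aggregates keyed by a business key (here, hypothetical dates); the tolerance is an assumption that allows for floating-point noise.

```python
def reconcile(batch: dict[str, float], stream: dict[str, float],
              tolerance: float = 1e-6) -> dict[str, list[str]]:
    """Compare keyed aggregates produced by the batch and streaming pipelines."""
    missing_in_stream = sorted(batch.keys() - stream.keys())
    missing_in_batch = sorted(stream.keys() - batch.keys())
    mismatched = sorted(
        k for k in batch.keys() & stream.keys()
        if abs(batch[k] - stream[k]) > tolerance
    )
    return {
        "missing_in_stream": missing_in_stream,
        "missing_in_batch": missing_in_batch,
        "mismatched": mismatched,
    }
```

Each category points to a different cause: keys missing from the stream often mean dropped or late events, extra keys can mean the stream is ahead of the last batch run, and mismatches usually reveal logic that was translated differently. Running the comparison on every batch cycle turns these into a trend you can track.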

This parallel approach provides a safety net that maintains business continuity. Comparing the two outputs also helps teams surface edge cases and boundary conditions that would otherwise go unnoticed until they caused production issues.
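A circuit breaker makes the fallback to batch automatic. The sketch below is a hypothetical, simplified version of the pattern: after `max_failures` consecutive errors reading the streaming output, it serves the batch output for a cooldown period, then tries streaming again and reopens on the first new failure. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Optional


class StreamingCircuitBreaker:
    """Falls back to batch output when the streaming path keeps failing."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0                    # consecutive streaming failures
        self.opened_at: Optional[float] = None

    def read(self, from_stream: Callable[[], dict],
             from_batch: Callable[[], dict]) -> dict:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                return from_batch()          # open: serve the batch fallback
            self.opened_at = None            # cooldown over: try streaming again
        try:
            result = from_stream()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            return from_batch()
        self.failures = 0                    # a success closes the breaker
        return result
```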

Phase 3: Incremental Transition

Key Activities:

Gradually migrate consumers from batch to streaming outputs

Implement monitoring specific to streaming processes

Adapt data governance practices to real-time paradigm

Establish performance baselines and optimization targets

Risk Mitigation Techniques:

Implement feature flags: Allow granular control over which data elements use streaming

Create shadow consumers: Test streaming consumption without impacting production systems

Deploy comprehensive monitoring: Monitor latency, throughput, and error rates with alerting

Establish recovery mechanisms: Create procedures for point-in-time recovery if issues occur
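Streaming-specific monitoring means evaluating rolling windows of metrics against thresholds, not checking a job's exit status. A minimal sketch, assuming you can collect per-record end-to-end latencies and a failure count for each monitoring window; the `WindowStats` type and the threshold values are hypothetical, and the percentile uses the nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class WindowStats:
    latencies_ms: list[float]  # end-to-end latency of each successful record
    errors: int                # records that failed in this window
    window_s: float            # length of the monitoring window in seconds


def p95(values: list[float]) -> float:
    # Nearest-rank 95th percentile.
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def check_alerts(stats: WindowStats, max_p95_ms: float,
                 max_error_rate: float, min_throughput: float) -> list[str]:
    processed = len(stats.latencies_ms)
    if processed == 0:
        # Silence is itself a symptom in a continuous system.
        return ["no records processed in window"]
    alerts = []
    if p95(stats.latencies_ms) > max_p95_ms:
        alerts.append("p95 latency above threshold")
    if stats.errors / (processed + stats.errors) > max_error_rate:
        alerts.append("error rate above threshold")
    if processed / stats.window_s < min_throughput:
        alerts.append("throughput below threshold")
    return alerts
```

The baselines established in this phase are what give the thresholds meaning; setting them from measured behavior rather than guesses keeps alerting actionable.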

The incremental approach lets you build confidence while limiting exposure. It works best when teams transition one data element at a time, so that a problematic component can be rolled back quickly without disrupting the entire system.
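Per-element feature flags are what make that granular rollback possible. A minimal sketch with a hypothetical `SourceRouter`: every data element reads from batch by default, streaming is switched on one element at a time, and rolling back a single element leaves the others untouched. A production setup would keep the flags in a shared configuration or feature-flag service rather than in memory.

```python
from typing import Callable


class SourceRouter:
    """Routes reads for each data element to the batch or streaming output."""

    def __init__(self, readers: dict[str, Callable[[str], object]]) -> None:
        self.readers = readers           # {"batch": reader, "streaming": reader}
        self.flags: dict[str, str] = {}  # data element -> source

    def enable_streaming(self, element: str) -> None:
        self.flags[element] = "streaming"

    def roll_back(self, element: str) -> None:
        # Revert a single element without touching any others.
        self.flags[element] = "batch"

    def source_for(self, element: str) -> str:
        return self.flags.get(element, "batch")  # batch stays the default

    def read(self, element: str) -> object:
        return self.readers[self.source_for(element)](element)
```

The same flag table also documents which system is authoritative for each data element at any point in the transition.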

Phase 4: Optimization and Scale

Key Activities:

Optimize streaming pipelines for performance and cost efficiency

Decommission redundant batch processes

Expand streaming architecture to additional data domains

Implement advanced streaming patterns (e.g., stateful processing, complex event processing)

Risk Mitigation Techniques:

Implement performance benchmarking: Continuously measure against established baselines

Maintain batch capability selectively: Keep batch capabilities for disaster recovery

Deploy chaos engineering practices: Proactively test failure scenarios

Establish streaming governance: Develop standards and best practices for future implementations

Even in this phase, maintain the ability to revert to batch for disaster recovery. Regular resilience testing helps organizations identify potential failure points before they affect production systems.
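Full chaos engineering runs against real infrastructure, but the core idea can be exercised in an ordinary test: inject random failures into a processing step and check that the pipeline's retry logic still produces exactly the fault-free result. The `flaky` wrapper, `InjectedFault`, and the retry policy below are hypothetical; the seeded `random.Random` keeps the test reproducible.

```python
import random
from typing import Callable, Iterable


class InjectedFault(Exception):
    """Simulated transient failure (e.g. a broker or network error)."""


def flaky(step: Callable[[int], int], failure_rate: float,
          rng: random.Random) -> Callable[[int], int]:
    # Wrap a processing step so it fails randomly at the given rate.
    def wrapped(x: int) -> int:
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return step(x)
    return wrapped


def run_with_retries(step: Callable[[int], int], records: Iterable[int],
                     max_attempts: int = 10) -> list[int]:
    out = []
    for r in records:
        for attempt in range(max_attempts):
            try:
                out.append(step(r))
                break
            except InjectedFault:
                if attempt == max_attempts - 1:
                    raise  # give up: surface the failure instead of dropping data
    return out
```

Comparing the faulty run with a clean baseline is the point of the exercise: if retries silently dropped or duplicated records, the outputs would differ.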

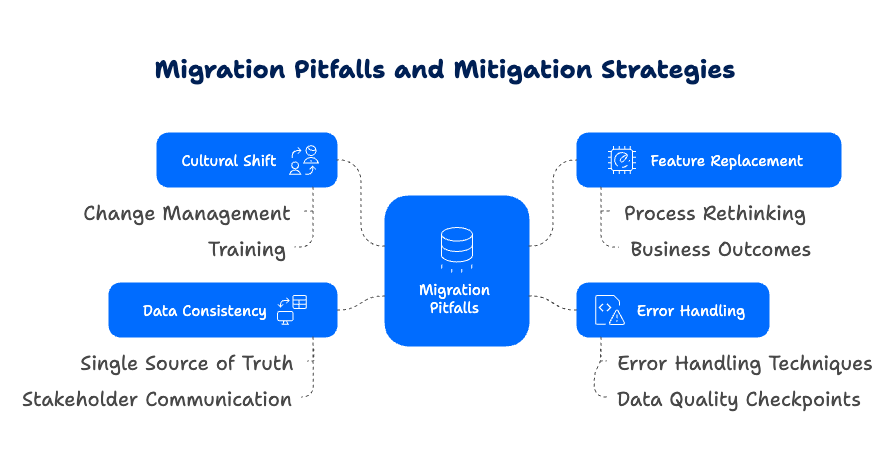

Common Pitfalls and How to Avoid Them

Streaming migration projects tend to run into the same recurring challenges:

Pitfall 1: Underestimating the Cultural Shift

Streaming requires a fundamentally different mental model for data processing. Organizations that focus exclusively on technology without addressing the cultural shift often struggle with adoption.

Mitigation Strategy: Invest in change management and training focusing on real-time thinking. Develop new operational playbooks that reflect the continuous nature of streaming systems.

Pitfall 2: Direct Feature-for-Feature Replacement

Attempting to replicate existing batch processing features exactly in streaming often leads to overcomplicated solutions.

Mitigation Strategy: Rethink processes from first principles rather than directly translating batch logic. Ask, "What business outcome are we trying to achieve?" rather than, "How do we do this same process in streaming?"
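As an illustration of asking about the outcome rather than the process: if the business needs "each customer's current average order value", a direct translation would rescan every order on each run, while a streaming-native design keeps only the minimal state (sum and count per customer) and updates it as each order arrives. This is a minimal sketch with hypothetical data shapes; both approaches produce the same answer.

```python
from collections import defaultdict


def batch_average(orders: list[tuple[str, float]]) -> dict[str, float]:
    # Batch style: rescan the full order history on every run.
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for customer, amount in orders:
        totals[customer] += amount
        counts[customer] += 1
    return {c: totals[c] / counts[c] for c in totals}


class RunningAverage:
    # Streaming style: keep only (sum, count) per customer and
    # update the answer in constant time as each order arrives.
    def __init__(self) -> None:
        self.sums: dict[str, float] = defaultdict(float)
        self.counts: dict[str, int] = defaultdict(int)

    def update(self, customer: str, amount: float) -> float:
        self.sums[customer] += amount
        self.counts[customer] += 1
        return self.sums[customer] / self.counts[customer]
```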

Pitfall 3: Inadequate Error Handling

In batch systems, errors often cause the entire job to fail, making them visible. In streaming, errors can be more subtle, affecting only certain events or creating silent data quality issues.

Mitigation Strategy: Implement comprehensive error handling with dead letter queues, detailed logging, and automated alerting. Create data quality checkpoints that verify critical business rules are maintained.
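The sketch below combines a dead letter queue with data quality checkpoints, under simplifying assumptions: the queue is an in-memory list (in production it would usually be a separate topic or table), and the business rules are hypothetical predicates supplied by the caller. A record that fails a rule, or makes a rule crash, is parked with a reason instead of stopping the pipeline, and the resulting error rate can feed the alerting described earlier.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DeadLetter:
    record: dict
    reason: str


@dataclass
class Pipeline:
    rules: dict[str, Callable[[dict], bool]]  # rule name -> predicate
    output: list[dict] = field(default_factory=list)
    dead_letters: list[DeadLetter] = field(default_factory=list)

    def handle(self, record: dict) -> None:
        for name, rule in self.rules.items():
            try:
                ok = bool(rule(record))
                reason = f"failed rule: {name}"
            except Exception as exc:  # malformed record: the rule itself crashed
                ok = False
                reason = f"rule {name} raised {type(exc).__name__}"
            if not ok:
                # Park the record with a reason; keep processing the stream.
                self.dead_letters.append(DeadLetter(record, reason))
                return
        self.output.append(record)

    def error_rate(self) -> float:
        total = len(self.output) + len(self.dead_letters)
        return len(self.dead_letters) / total if total else 0.0
```

Keeping the reason alongside each parked record makes the silent failures visible and lets them be replayed once the underlying issue is fixed.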

Pitfall 4: Neglecting Data Consistency During Transition

During parallel operation, inconsistencies between batch and streaming outputs can erode business confidence in the migration, and that loss of confidence is a common reason streaming projects stall before completion.

Mitigation Strategy: Implement a "single source of truth" approach in which either batch or streaming is authoritative for each data element during the transition. Clearly communicate to stakeholders which system is definitive for which data elements.

Conclusion

Migrating from batch ETL to real-time streaming is a transformation that goes well beyond technology. By applying a structured risk mitigation framework, organizations can navigate this complex transition while maintaining business continuity.

The most successful migrations share common characteristics: they build capabilities before attempting full implementation, use parallel processing to validate outcomes, transition incrementally, and optimize continuously as they scale. By following these principles, organizations can unlock the full potential of real-time data while minimizing the inherent risks of such a fundamental architectural shift.

Remember that this migration is not only a technical project but also a strategic business transformation. Organizations that approach it with appropriate risk management will gain significant competitive advantages through enhanced agility, reduced costs, and the ability to deliver insights when they create maximum value: in real time.

That’s it for this week. If you found this helpful, leave a comment to let me know. ✊

About the Author

With 15+ years of experience implementing data integration solutions across financial services, telecommunications, retail, and government sectors, I've helped dozens of organizations implement robust ETL processing. My approach emphasizes pragmatic implementations that deliver business value while effectively managing risk.