#24 - Is Your ETL Sabotaging Your AI? (The Real Reason AI Projects Fail)

Discover the Modular Data Strategy That Separates AI Winners from Followers & Ensures Success

Read time: 3 minutes.

Hi Data Modernisers,

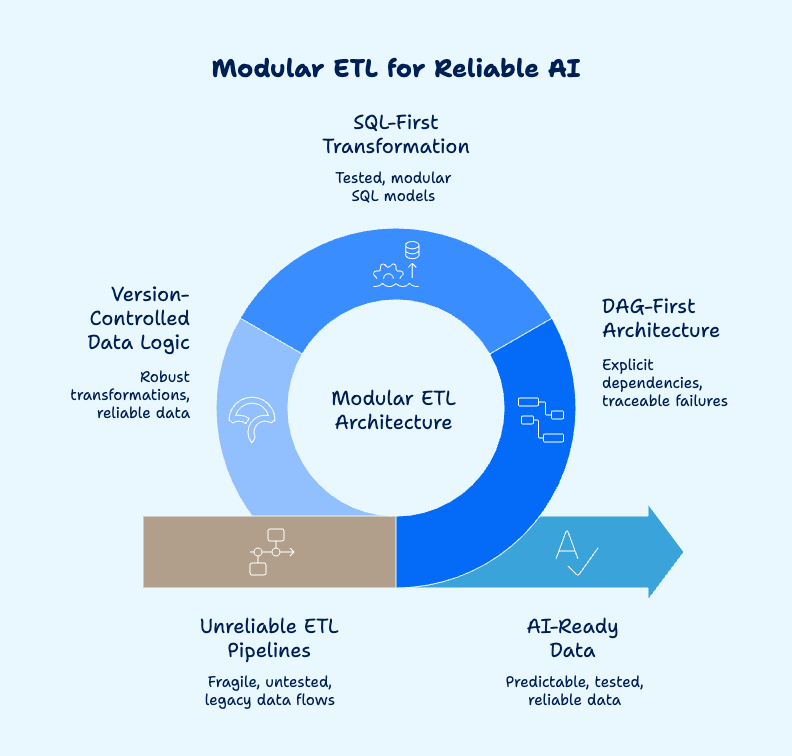

TLDR: Your AI models keep failing in production because your ETL pipeline is a mess of legacy scripts and cron jobs. Here's how modular dbt + Airflow architecture creates the reliable, testable data flows that AI actually needs to work.

The pattern is familiar: companies build brilliant AI models that crash and burn the moment they enter production, and the culprit is almost always the same.

Not the algorithms. Not the data scientists. It's the ETL monster pieced together from years of "quick fixes" and cron jobs that nobody wants to handle. Teams spend 6 months perfecting recommendation engines, only to feed them with data processed by scripts that were written in 2014 and haven't been touched since.

Your AI is only as intelligent as your data pipeline is reliable. Here's what we're tackling:

Why modular ETL architecture is the difference between AI that works and AI that dies

The specific dbt + Airflow patterns that actually scale for ML workloads

Real examples of teams who rebuilt their pipelines and unlocked AI capabilities they didn't know were possible

Let me show you what modern data architecture actually looks like.

Sponsored By: BigDataDig

Stop letting spaghetti ETL kill your AI dreams.

We specialize in rebuilding chaotic data pipelines into modular, AI-ready architectures using dbt + Airflow. No more mysterious cron jobs that break when someone sneezes. No more ML models trained on stale data because your ETL takes 6 hours to run. We build pipelines with explicit dependencies, built-in testing, and real-time capabilities that your AI systems can actually rely on.

Ready to see what happens when your AI gets fresh, reliable data? Book a free pipeline assessment to see exactly how we'd rebuild your ETL for AI workloads.

3 Modular ETL Patterns That Turn Unreliable Data Chaos Into AI-Ready Intelligence

Your cron jobs might be "working" for monthly reports, but they're killing your AI initiatives.

AI systems need predictable, tested, reliable data flows, not legacy scripts held together with duct tape and prayer. The shift from scripts to structure allows teams to scale without chaos, and that's exactly what your AI workloads demand.

Pattern #1: DAG-First Architecture That Makes Dependencies Explicit

Stop playing guessing games with your data flow.

Traditional ETL scripts evolve organically, one cron job at a time. Over time, they become:

Tightly coupled without clear dependencies

Undocumented and impossible to troubleshoot

Fragile: one change breaks everything downstream

I have seen fintech companies run legacy SQL scripts that processed user transactions hourly with no dependency tracking. That held up until they tried to feed the same data into real-time fraud detection models.

The solution: Airflow DAGs, where each task is explicitly defined and scheduled with clear dependencies.

When your fraud detection model needs customer transaction patterns, the scheduler knows exactly which upstream tasks must complete first:

No more mystery failures

No more "it worked yesterday" debugging sessions

Dependencies become visible, failures become traceable

Your AI models get predictable data delivery because every transformation step is mapped, monitored, and measurable.
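To make "explicit dependencies" concrete, here is a minimal sketch in plain Python. It is not Airflow code: the task names are hypothetical, and the standard library's `graphlib` stands in for the ordering an orchestrator such as Airflow works out from a DAG. The point is only that once dependencies are written down as data, both the run order and the full upstream chain of any task can be computed rather than guessed.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key lists the upstream tasks it waits for.
dependencies = {
    "extract_transactions": set(),
    "extract_customers": set(),
    "build_transaction_patterns": {"extract_transactions", "extract_customers"},
    "score_fraud_model": {"build_transaction_patterns"},
}


def run_order(deps):
    """Return one valid execution order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())


def upstream_of(task, deps):
    """Return every task that must finish before `task` can start."""
    seen = set()
    stack = list(deps.get(task, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(deps.get(current, ()))
    return seen


if __name__ == "__main__":
    print(run_order(dependencies))
    print(sorted(upstream_of("score_fraud_model", dependencies)))
```

When a task fails, `upstream_of` is the kind of lookup that turns "it worked yesterday" into a short list of suspects.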

Pattern #2: SQL-First Transformation Models That Actually Test Themselves

Replace hundreds of undocumented transformation queries with tested, modular models.

Unlike traditional ETL tools, dbt embraces SQL as the core modeling language and Git as the source of truth. Each model is a SQL file whose dependencies are declared explicitly through ref() calls.
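As a rough illustration of why ref() matters, the sketch below pulls the ref() calls out of a few model files and derives a build order from them. The model names and SQL are hypothetical, and the regex is a deliberate simplification: dbt itself resolves ref() through Jinja rendering, not pattern matching.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models keyed by name; in a dbt project these would be
# .sql files under models/.
models = {
    "stg_orders": "select * from raw.orders",
    "stg_customers": "select * from raw.customers",
    "customer_ltv": """
        select c.customer_id, sum(o.amount) as lifetime_value
        from {{ ref('stg_customers') }} c
        join {{ ref('stg_orders') }} o on o.customer_id = c.customer_id
        group by c.customer_id
    """,
}

# Matches {{ ref('model_name') }} with single or double quotes.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def model_dependencies(sql_by_model):
    """Map each model to the set of models it references via ref()."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in sql_by_model.items()}


def build_order(sql_by_model):
    """Return an order in which every model is built after its dependencies."""
    return list(TopologicalSorter(model_dependencies(sql_by_model)).static_order())


if __name__ == "__main__":
    print(model_dependencies(models))
    print(build_order(models))
```

Because the dependencies live in the SQL itself, nobody has to maintain a separate list of what runs before what.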

I've seen companies replace hundreds of undocumented SQL queries with tested, modular dbt models, and suddenly, their customer lifetime value predictions became reliable.

Here's what changes:

dbt run ensures transformations are deterministic

dbt test validates logic at every deploy

dbt docs generates a searchable lineage automatically

Your AI models get consistent, tested data instead of whatever happened to run last night.
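For a sense of what "tested data" means in practice, here is a small Python sketch of the two checks that dbt's built-in `not_null` and `unique` tests express. dbt runs these as SQL against the warehouse; the in-memory rows and helper functions below are hypothetical and only mirror the idea.

```python
from collections import Counter


def not_null_failures(rows, column):
    """Return the rows where `column` is missing or None."""
    return [row for row in rows if row.get(column) is None]


def unique_failures(rows, column):
    """Return the values of `column` that appear more than once."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return sorted(value for value, n in counts.items() if n > 1)


# Hypothetical output of a customer lifetime value model.
rows = [
    {"customer_id": 1, "lifetime_value": 120.0},
    {"customer_id": 2, "lifetime_value": None},
    {"customer_id": 2, "lifetime_value": 75.5},
]

if __name__ == "__main__":
    print(not_null_failures(rows, "lifetime_value"))
    print(unique_failures(rows, "customer_id"))
```

A duplicated customer or a null prediction input caught here never reaches model training.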

The modular approach means that when business rules change (and they always do):

You update one model, and everything downstream adjusts automatically

Your recommendation engine doesn't break because someone changed how customer segments are calculated

Changes are tracked, reviewed, and tested like code.

Pattern #3: Version-Controlled Data Logic That Deploys Like Code

Approach your data transformations with the same diligence as your AI model code.

Teams can define pipelines in Python, version them using Git, containerize them with Docker, and deploy them via continuous integration and continuous deployment (CI/CD). This shift from scripts to structure is what separates AI systems that scale from those that collapse under their own complexity.

The architecture:

Git workflows with automated testing for DAG parseability and dbt compilation on every pull request

CI/CD integration that enables versioning, automated testing, and deployment without pain

Infrastructure as code that makes your entire data pipeline reproducible

When your data team pushes changes, they get the same code review, testing, and deployment process as your ML engineers. Your AI models get reliable data because the transformations feeding them are as robust as the models themselves.
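A pull-request check does not have to be elaborate to be useful. The sketch below is one assumed shape for the cheapest possible gate: it only confirms that each pipeline file is valid Python syntax. The file names and contents are hypothetical, and a real check would typically go further by loading the DAGs with Airflow installed and running dbt's compile step.

```python
import ast


def syntax_errors(sources):
    """Map each file name to its syntax error; an empty dict means all files parse."""
    errors = {}
    for name, source in sources.items():
        try:
            ast.parse(source, filename=name)
        except SyntaxError as exc:
            errors[name] = f"line {exc.lineno}: {exc.msg}"
    return errors


# Hypothetical file contents; in CI these would be read from the repository.
sources = {
    "dags/fraud_features.py": "schedule = '@hourly'\n",
    "dags/broken.py": "def load(:\n    pass\n",
}

if __name__ == "__main__":
    failures = syntax_errors(sources)
    for name, message in failures.items():
        print(f"{name}: {message}")
    # A non-zero exit code is what makes the CI job fail the pull request.
    raise SystemExit(1 if failures else 0)
```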

That's it.

Here's what you learned today:

Modular ETL architecture separates AI winners from followers by making data flows predictable and testable

DAG-first design with explicit dependencies eliminates the mysterious failures that kill AI initiatives in production

Version-controlled transformation logic ensures your AI models receive consistent, reliable data that stays intact when business rules change

Stop accepting "it worked in development" when your AI needs production-grade reliability. Every undefined dependency in your ETL is a potential point of failure for your AI systems.

PS: If you're enjoying this newsletter, please forward it to the data engineer who's still debugging cron jobs at 2 AM. They deserve better.

And whenever you are ready, there are 3 ways I can help you:

Free ETL Architecture Audit - 60-minute deep-dive where we map your current data flows and identify exactly where chaos is killing your AI initiatives

Modular Pipeline Migration - Complete rebuild from spaghetti scripts to dbt + Airflow architecture that your AI systems can actually depend on

AI-Ready Data Platform - Full implementation of version-controlled, tested, modular ETL with real-time capabilities designed for production AI workloads

That’s it for this week. If you found this helpful, leave a comment to let me know ✊

About the Author

Khurram, founder of BigDataDig and a former Teradata Global Data Consultant, brings over 15 years of deep expertise in data integration and robust ETL processing. Leveraging this extensive background, he now specializes in helping organizations in the financial services, telecommunications, retail, and government sectors implement cutting-edge, AI-ready data solutions. His methodology prioritizes pragmatic, value-driven implementations that effectively manage risk while ensuring that data is meticulously prepared and optimized for AI and advanced analytics.