#16 - 5 Key Lakehouse Choices for 2025: Powering Your Modern Data Architecture

The data lakehouse goldmine is here, but which tools actually deliver?

Hello Data Modernizers,

Welcome to this week's edition. Choosing the right components for your data lakehouse isn't just about picking features; it's about laying the groundwork for your entire data strategy through 2025 and beyond. While the market buzzes with countless options promising transformation, only a few truly serve as the foundational building blocks for a robust system.

In this edition, we'll explore:

The 5 foundational tool categories that form the core of resilient, scalable data platforms in 2025.

Key architectural advantages and the long-term value proposition these tools offer over legacy systems.

Essential integration patterns showing how these foundational tools work together to power your data architecture effectively.

Let’s dive in.

5 Essential Lakehouse Tool Categories for Mid-Sized Enterprises, Even if Your IT Budget Is Shrinking

The right combination of tools is what separates a successful lakehouse from an expensive failed experiment. Here are the five categories you need to cover:

1. Table Format Engine: Delta Lake, Iceberg, or Hudi

The foundation of any successful lakehouse is a reliable table format that brings ACID transactions to your data lake.

Delta Lake: Mature ecosystem, strongest Databricks integration, excellent time travel features

Apache Iceberg: Growing momentum, vendor-neutral, strong Snowflake and AWS support

Apache Hudi: Best for record-level updates and real-time ingestion scenarios

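Why it matters: The right table format lets a single copy of your data serve both data-lake and warehouse-style workloads, instead of maintaining redundant copies for each, while adding transactional reliability and a foundation for governance.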
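To make "ACID transactions on a data lake" concrete, here is a minimal sketch of the idea most table formats share: an ordered commit log stored next to the data files. It is a hypothetical toy (the `ToyTable` class, its `_log/` directory, and the add/remove action format are illustrative assumptions), not the actual on-disk layout of Delta Lake, Iceberg, or Hudi. Each commit is published with a put-if-absent hard link, so two writers cannot both claim the same version, and replaying the log up to an earlier version gives a simple form of time travel. It assumes a local filesystem that supports hard links.

```python
import json
import os
import tempfile


class ToyTable:
    """Hypothetical, minimal table format: a numbered JSON commit log in
    <root>/_log/. Loosely inspired by table-format transaction logs; this is
    NOT the real Delta Lake, Iceberg, or Hudi layout."""

    def __init__(self, root: str) -> None:
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _path(self, version: int) -> str:
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self) -> int:
        """Highest committed version, or -1 for an empty table."""
        versions = [int(n[:-5]) for n in os.listdir(self.log_dir) if n.endswith(".json")]
        return max(versions, default=-1)

    def commit(self, actions: list, read_version: int) -> int:
        """Publish version read_version + 1, or raise FileExistsError.

        The commit is written to a temp file first, then hard-linked into the
        log. os.link refuses to overwrite an existing file, so only one writer
        can claim a given version (optimistic concurrency); the loser must
        re-read the table and retry."""
        target = self._path(read_version + 1)
        fd, tmp = tempfile.mkstemp(dir=self.root, suffix=".tmp")
        try:
            with os.fdopen(fd, "w") as f:
                json.dump(actions, f)
            os.link(tmp, target)  # atomic put-if-absent
        finally:
            os.remove(tmp)
        return read_version + 1

    def files_at(self, version: int) -> set:
        """Time travel: replay add/remove actions for versions 0..version."""
        live = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                for action in json.load(f):
                    if action["op"] == "add":
                        live.add(action["file"])
                    elif action["op"] == "remove":
                        live.discard(action["file"])
        return live


with tempfile.TemporaryDirectory() as root:
    table = ToyTable(root)
    table.commit([{"op": "add", "file": "part-0.parquet"}], read_version=-1)
    table.commit([{"op": "remove", "file": "part-0.parquet"},
                  {"op": "add", "file": "part-1.parquet"}], read_version=0)
    print(table.files_at(0))  # {'part-0.parquet'}
    print(table.files_at(1))  # {'part-1.parquet'}
    try:
        # A stale writer that still believes version 0 is the latest.
        table.commit([{"op": "add", "file": "part-2.parquet"}], read_version=0)
    except FileExistsError:
        print("conflict: version 1 already exists; re-read and retry")
```

The point worth noticing is that readers never list the data files directly; they trust the log. That is what keeps a failed or conflicting write from leaving partial state visible.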

2. Query Engine: Trino, Athena, or BigQuery

Your choice of query engine determines how efficiently you can analyze data across your lakehouse architecture.

Trino (formerly PrestoSQL): Open-source, maximum control, best for technical teams

Amazon Athena: Serverless simplicity, pay-per-query pricing, seamless S3 integration

Google BigQuery: Enterprise-grade performance, robust security, excellent for large datasets

Why it matters: The right query engine lets you get close to warehouse-like performance on open lake storage without being locked into the traditional warehouse cost model.
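Pay-per-query engines such as Athena bill by the amount of data scanned, which is why partition layout matters as much as the engine itself. The sketch below shows how partition pruning (skipping partitions that a date filter rules out) changes both bytes scanned and cost. The `Partition` class, the 30 daily partitions of 50 GB, and the `PRICE_PER_TB` value are hypothetical; check your provider's pricing page for real rates, units, and minimums.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Illustrative price only (hypothetical); check your provider's current rates.
PRICE_PER_TB = 5.0
TB = 10**12  # assumes decimal terabytes for this sketch


@dataclass(frozen=True)
class Partition:
    event_date: date  # partition column value, e.g. one daily folder
    size_bytes: int   # bytes the engine would read if it scans this partition


def bytes_scanned(partitions, start, end):
    """Partition pruning: only partitions whose key falls inside [start, end]
    are read; all others are skipped without touching their files."""
    return sum(p.size_bytes for p in partitions if start <= p.event_date <= end)


def scan_cost(num_bytes, price_per_tb=PRICE_PER_TB):
    """Pay-per-scan cost under the simple per-TB model assumed above."""
    return num_bytes / TB * price_per_tb


# 30 hypothetical daily partitions of 50 GB each.
partitions = [Partition(date(2025, 1, 1) + timedelta(days=i), 50 * 10**9)
              for i in range(30)]

full = bytes_scanned(partitions, date.min, date.max)  # no filter at all
last_week = bytes_scanned(partitions, date(2025, 1, 24), date(2025, 1, 30))

print(f"full scan: {full / 10**9:.0f} GB, ${scan_cost(full):.2f}")  # full scan: 1500 GB, $7.50
print(f"last 7 days: {last_week / 10**9:.0f} GB, ${scan_cost(last_week):.2f}")  # last 7 days: 350 GB, $1.75
```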

3. Data Catalog: AWS Glue, Databricks Unity Catalog, or Collibra

Without proper metadata management, your lakehouse becomes an unnavigable swamp.

AWS Glue Data Catalog: Budget-friendly, AWS-native, suitable for smaller organizations

Databricks Unity Catalog: Rich governance features, excellent for sensitive data environments

Collibra: Enterprise-grade lineage tracking, regulatory compliance, comprehensive governance

Why it matters: Proper cataloging simplifies compliance reporting by automatically maintaining data lineage and ownership.
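Maintained lineage boils down to a graph walk: each catalog entry records its owner and its direct inputs, and a compliance question like "which sources feed this report, and who owns them?" becomes a traversal. The dataset names, owners, and the `CATALOG` dictionary below are hypothetical; real catalogs such as Unity Catalog or Collibra expose lineage through their own APIs, which this sketch does not imitate.

```python
from collections import deque

# Hypothetical catalog: dataset -> owner and direct upstream inputs.
CATALOG = {
    "raw.orders":     {"owner": "ingest-team", "upstream": []},
    "raw.customers":  {"owner": "ingest-team", "upstream": []},
    "staging.orders": {"owner": "data-eng",    "upstream": ["raw.orders"]},
    "marts.revenue":  {"owner": "finance-bi",  "upstream": ["staging.orders", "raw.customers"]},
}


def upstream_lineage(catalog, dataset):
    """Breadth-first walk over upstream edges; returns every direct or
    transitive input of `dataset` (the dataset itself is excluded)."""
    seen = set()
    queue = deque(catalog[dataset]["upstream"])
    while queue:
        name = queue.popleft()
        if name in seen:
            continue  # shared ancestors are visited only once
        seen.add(name)
        queue.extend(catalog[name]["upstream"])
    return seen


def lineage_report(catalog, dataset):
    """Upstream datasets mapped to their owners, sorted for stable output."""
    return {name: catalog[name]["owner"]
            for name in sorted(upstream_lineage(catalog, dataset))}


print(lineage_report(CATALOG, "marts.revenue"))
# {'raw.customers': 'ingest-team', 'raw.orders': 'ingest-team', 'staging.orders': 'data-eng'}
```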

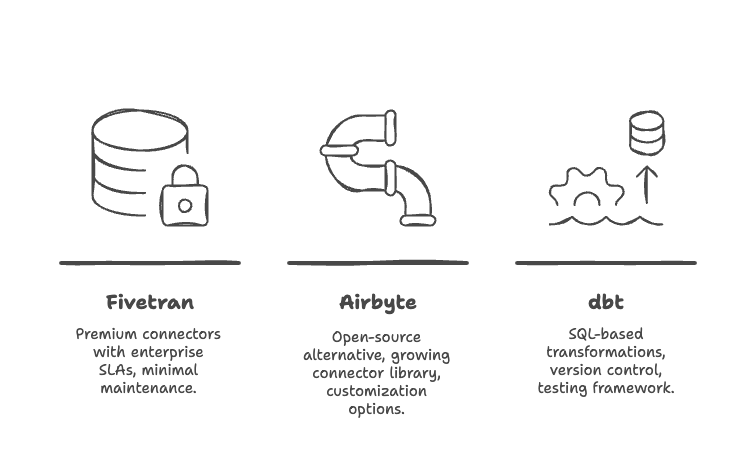

4. ETL/ELT: Fivetran, Airbyte, or dbt

Even in a lakehouse architecture, you still need reliable ingestion (extract and load) and transformation. Fivetran and Airbyte focus on the first; dbt focuses on the second.

Fivetran: Premium managed connectors, enterprise SLAs, minimal maintenance

Airbyte: Open-source alternative, growing connector library, more customization

dbt: SQL-based transformations, version control, built-in testing framework

Why it matters: Modern ETL/ELT tools dramatically reduce the engineering effort required to keep your lakehouse fresh and reliable.
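Much of the effort these tools save comes from two patterns: incremental extraction with a saved cursor (only rows changed since the last run are copied, then upserted by primary key) and declarative data tests on the result. The sketch below imitates both in plain Python. The function names, the `updated_at` cursor field, and the `state` dictionary are hypothetical; `check_not_null` and `check_unique` only mirror the spirit of dbt's `not_null` and `unique` tests, not their implementation.

```python
from collections import Counter
from datetime import datetime


def incremental_sync(source_rows, destination, state, cursor_field="updated_at", key="id"):
    """Copy only rows changed since the saved cursor, upserting by primary key.

    `destination` is a dict keyed by primary key; `state` persists the cursor
    (high-water mark) between runs. Both structures are hypothetical."""
    cursor = state.get("cursor")
    changed = [r for r in source_rows if cursor is None or r[cursor_field] > cursor]
    for row in changed:
        destination[row[key]] = dict(row)  # insert new keys, overwrite updated ones
    if changed:
        state["cursor"] = max(r[cursor_field] for r in changed)
    return len(changed)


def check_not_null(rows, column):
    """In the spirit of dbt's not_null test: return the rows that violate it."""
    return [r for r in rows if r.get(column) is None]


def check_unique(rows, column):
    """In the spirit of dbt's unique test: return duplicated non-null values."""
    counts = Counter(r[column] for r in rows if r.get(column) is not None)
    return sorted(v for v, n in counts.items() if n > 1)


destination, state = {}, {}
source = [
    {"id": 1, "email": "a@example.com", "updated_at": datetime(2025, 1, 1, 9)},
    {"id": 2, "email": "b@example.com", "updated_at": datetime(2025, 1, 1, 10)},
]
first = incremental_sync(source, destination, state)

# Next day: row 2 is updated (and loses its email), row 3 is new.
source = [
    source[0],
    {"id": 2, "email": None, "updated_at": datetime(2025, 1, 2, 8)},
    {"id": 3, "email": "c@example.com", "updated_at": datetime(2025, 1, 2, 9)},
]
second = incremental_sync(source, destination, state)
third = incremental_sync(source, destination, state)  # nothing changed since last run

print(first, second, third)  # 2 2 0
print([r["id"] for r in check_not_null(destination.values(), "email")])  # [2]
print(check_unique(destination.values(), "email"))  # []
```

One caveat: the strict `>` comparison on the cursor can skip a row that arrives late with exactly the same timestamp as the saved high-water mark. Production connectors handle this in different ways, so treat this as an illustration of the pattern rather than a safe sync algorithm.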

5. Orchestration: Airflow, Dagster, or Prefect

The final piece is reliable workflow orchestration to keep your data fresh and transformations running smoothly.

Apache Airflow: Most widely used, extensive provider ecosystem, mature community

Dagster: Data-aware pipelines, better observability, simpler debugging

Prefect: Developer-friendly, lower overhead, excellent for smaller teams

Why it matters: Reliable orchestration ensures your lakehouse stays current without requiring constant monitoring and intervention.
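At their core, orchestrators run tasks in dependency order and retry transient failures so nobody has to rerun a pipeline by hand. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to do that for a hypothetical extract, load, transform, and test chain. It is not the API of Airflow, Dagster, or Prefect; the task names, `run_pipeline`, and the retry policy are assumptions for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying each up to `max_retries` times.

    tasks: name -> zero-argument callable
    dependencies: name -> set of upstream task names that must finish first
    Returns a log of (task, attempt, outcome) tuples."""
    log = []
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt, "success"))
                break
            except Exception:  # simplification: treat every error as retryable
                log.append((name, attempt, "failed"))
        else:
            # All attempts failed: stop so downstream tasks never run on bad inputs.
            raise RuntimeError(f"{name} failed after {max_retries + 1} attempts")
    return log


calls = {"load": 0}


def flaky_load():
    """Hypothetical load step that fails once with a transient error."""
    calls["load"] += 1
    if calls["load"] == 1:
        raise ConnectionError("simulated transient failure")


tasks = {
    "extract": lambda: None,
    "load": flaky_load,
    "transform": lambda: None,
    "test": lambda: None,
}
dependencies = {
    "extract": set(),
    "load": {"extract"},
    "transform": {"load"},
    "test": {"transform"},
}

for entry in run_pipeline(tasks, dependencies):
    print(entry)
```

Stopping the run once a task exhausts its retries, rather than continuing, keeps downstream tables from being rebuilt on stale or partial inputs.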

That's it.

Here's what you learned today:

Foundation matters: Table formats (Delta Lake, Iceberg, Hudi) bring ACID reliability to your data lake and underpin every other layer of the lakehouse.

Query performance is key: Modern engines like Trino, Athena, and BigQuery can deliver warehouse-like capabilities at a fraction of the traditional cost.

Don't forget the supporting layers: A data catalog for governance, plus ETL/ELT and orchestration tools, completes the lakehouse ecosystem for enterprise-grade operations.

The tool landscape has never been more favorable for medium-sized businesses seeking enterprise-level analytics capabilities. Each component in this stack can be adopted incrementally, so you can modernize at your own pace and start seeing returns early.

That’s it for this week. If you found this helpful, leave a comment to let me know ✊

About the Author

With 15+ years of experience implementing data integration solutions across financial services, telecommunications, retail, and government sectors, I've helped dozens of organizations implement robust ETL processing. My approach emphasizes pragmatic implementations that deliver business value while effectively managing risk.