#034 - dbt Didn't Kill ETL

It just changed the game. Here's when to stick with the classic playbook.

Hey there,

Most data leaders believe dbt can automatically resolve their ETL issues. However, 40% of dbt migrations fail because teams underestimate the importance of traditional ETL in certain situations.

Here's the dbt truth nobody talks about, and why your "outdated" ETL infrastructure might be saving you millions.

The ETL Graveyard: Why Traditional Tools Became Roadblocks

When I began my career in data engineering, ETL mainly meant waiting. Analysts would submit requests for new reports and wait for weeks as engineering teams built the necessary pipelines.

The problems were systemic:

Development bottlenecks: Each change required dedicated engineering resources. Even a simple report update could take 2-3 weeks.

Collaboration barriers: Proprietary ETL languages and complicated interfaces kept business analysts away from the transformation logic.

Maintenance nightmares: I've seen companies allocate up to 60% of their data engineering resources solely to maintaining legacy ETL pipelines. Licensing adds to the bill: one client was paying $150K a year for Informatica alone.

Change resistance: Changing one transformation often disrupted three others, leading teams to fear innovation.

How dbt Changed Everything (And Why It Worked)

dbt didn't replace ETL outright; it democratised data transformation.

ELT Over ETL: dbt handles only the transform step. Data is loaded into your cloud warehouse first and then transformed there with SQL, which removes the need for costly dedicated transformation servers.

SQL-First Approach: Your analysts can now take ownership of transformations. I've seen teams cut report delivery from weeks to hours by putting dbt in analysts' hands.

Engineering Best Practices: Version control, automated testing, and documentation. dbt brought software engineering discipline to data teams.

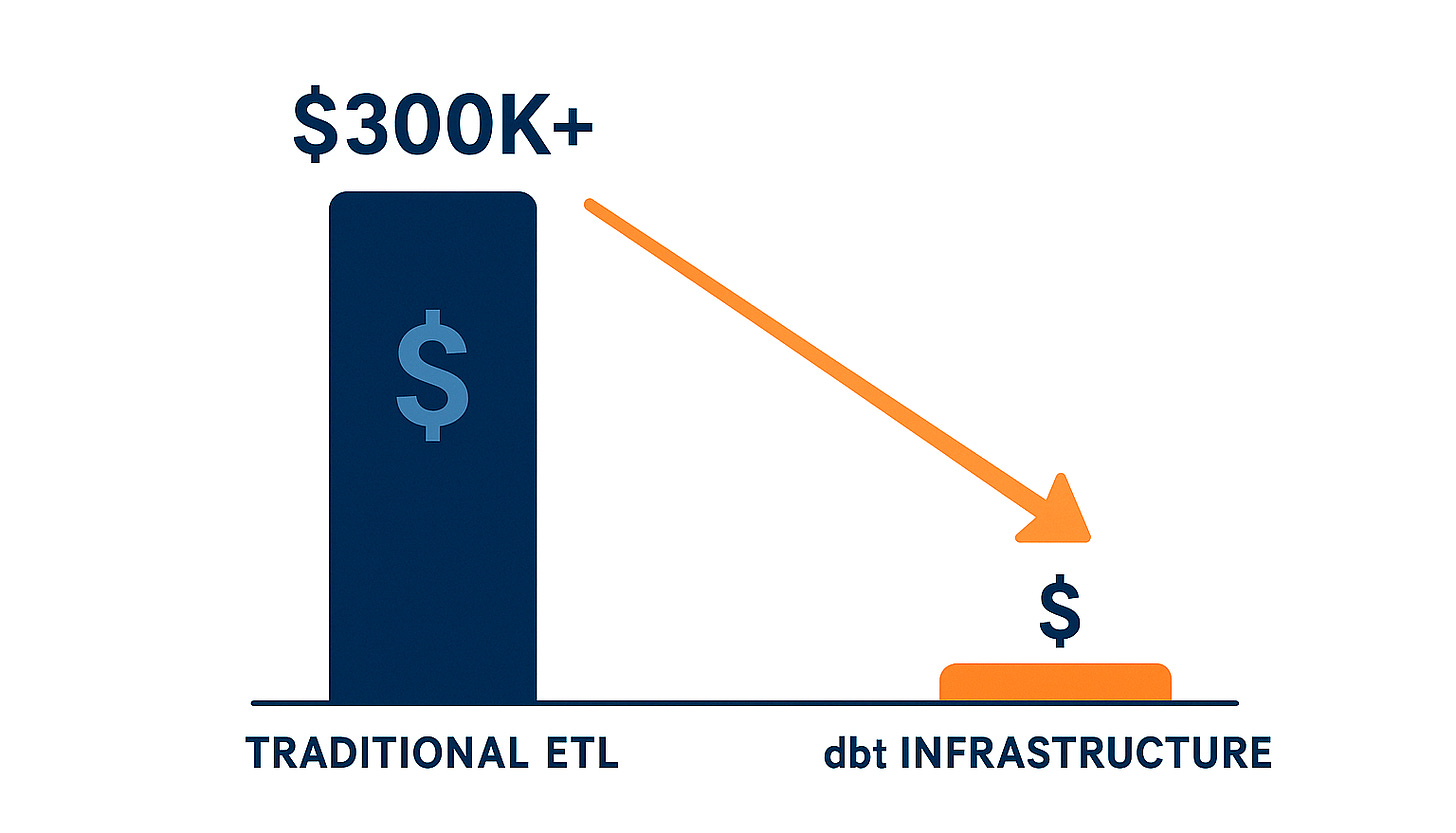

Cost Efficiency: One retail client reduced their data processing costs by 40% by switching from Talend to dbt. This change allowed them to eliminate ETL server licensing fees and lower engineering overhead.

The key transformation patterns that make dbt powerful:

✅ Incremental models for handling massive datasets efficiently

✅ Snapshot models for tracking historical changes

✅ Layered pipelines (staging → intermediate → marts) for scalability

✅ Reusable macros for standardising business logic
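
To make the first pattern concrete, here's a minimal sketch of the idea behind an incremental model, written in plain Python rather than dbt's SQL. It is an illustration, not dbt's implementation: the function `incremental_merge` and the column names `id` and `updated_at` are hypothetical. The idea is to process only the source rows that are newer than anything already in the target, then upsert them by key.

```python
from datetime import datetime

def incremental_merge(target, source, key="id", ts="updated_at"):
    """Upsert only the source rows newer than the latest row already in target."""
    # High-water mark: the newest timestamp already loaded (None on the first run).
    high_water = max((row[ts] for row in target), default=None)
    # The first run processes everything; later runs process only newer rows.
    new_rows = [r for r in source if high_water is None or r[ts] > high_water]
    merged = {row[key]: row for row in target}
    for row in new_rows:
        merged[row[key]] = row  # insert new keys, overwrite changed ones
    return list(merged.values()), len(new_rows)

# Hypothetical data: two rows already loaded, then one change and one new row.
target = [
    {"id": 1, "amount": 100, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "amount": 250, "updated_at": datetime(2024, 1, 2)},
]
source = target + [
    {"id": 2, "amount": 300, "updated_at": datetime(2024, 1, 3)},  # changed row
    {"id": 3, "amount": 75, "updated_at": datetime(2024, 1, 3)},   # new row
]
result, processed = incremental_merge(target, source)
```

In dbt itself you'd express the same thing in SQL, using an incremental materialisation and a timestamp filter that applies only on incremental runs. The payoff is the same: the full history is scanned once, and every later run touches only what changed.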

Real-World Impact: The Numbers Don't Lie

A financial services client recently shared their dbt migration results:

70% faster development cycles

50% reduction in data engineering workload

$200K annual savings on infrastructure costs

3x more analysts contributing to data pipelines

But here's the interesting part: they retained 30% of their original ETL infrastructure.

When dbt Isn't Enough: The Uncomfortable Truth

Having assisted companies with data modernisation, I can confidently say: dbt isn't always the solution.

Complex integration scenarios: If you're working with over 20 APIs, scraping web data, or managing real-time streams, you'll need ingestion and orchestration tools in addition to dbt, because dbt only transforms data that is already in the warehouse.

Extreme scale batch processing: Certain legacy ETL tools continue to outperform dbt in specific high-volume scenarios, particularly when using specialised connectors.

Mixed data types: Teams that handle unstructured data, images, or IoT sensor data often require traditional ETL processes for preprocessing before dbt can be used.

Your Migration Roadmap: The 5-Phase Approach

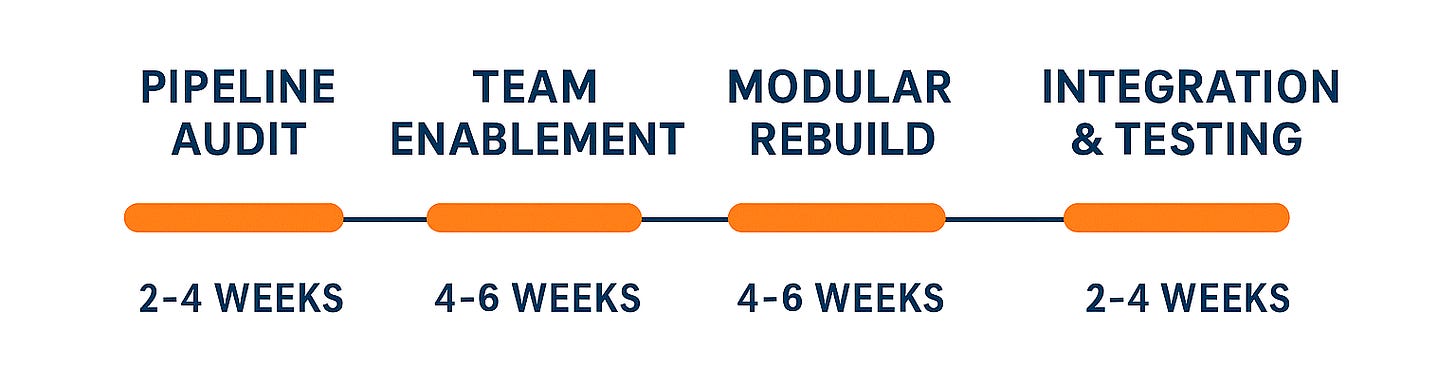

Phase 1: Pipeline Audit (2-4 weeks). Determine which transformations are suitable for migration to dbt, emphasising SQL-based logic within your warehouse.

Phase 2: Team Enablement (4-6 weeks). Train analysts on the fundamentals of dbt, beginning with simple models to build their confidence.

Phase 3: Modular Rebuild (8-12 weeks). Follow dbt's layered methodology: start by building staging models, then intermediate models, then marts.

Phase 4: Integration & Testing (6-8 weeks). Include documentation, testing, and CI/CD workflows, as this is where the true value is realised.

Phase 5: Hybrid Optimisation (4-6 weeks). Maintain ETL for complex integrations and establish clear handoff points between ETL and dbt.
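
If you want a starting point for the Phase 1 audit, here's a rough sketch of how the classification might look in Python. Everything in it is an assumption for illustration: the `Pipeline` fields, the labels, and the single rule (SQL logic on data already in the warehouse goes to dbt, everything else stays on ETL). Your own audit will need richer criteria.

```python
from dataclasses import dataclass

@dataclass
class Pipeline:
    name: str
    logic: str   # hypothetical labels: "sql" or "procedural"
    source: str  # hypothetical labels: "warehouse", "api", "stream", "unstructured"

def classify(p: Pipeline) -> str:
    """Return 'dbt' for SQL logic on warehouse data, otherwise 'keep_etl'."""
    if p.logic == "sql" and p.source == "warehouse":
        return "dbt"
    return "keep_etl"

def audit(pipelines):
    """Group pipeline names by destination and compute the share that can move to dbt."""
    plan = {"dbt": [], "keep_etl": []}
    for p in pipelines:
        plan[classify(p)].append(p.name)
    share = len(plan["dbt"]) / len(pipelines) if pipelines else 0.0
    return plan, share

# Hypothetical inventory, not taken from any client.
inventory = [
    Pipeline("daily_revenue", "sql", "warehouse"),
    Pipeline("customer_dim", "sql", "warehouse"),
    Pipeline("clickstream_ingest", "procedural", "stream"),
    Pipeline("image_metadata", "procedural", "unstructured"),
]
plan, dbt_share = audit(inventory)
print(plan, f"{dbt_share:.0%}")
```

The `keep_etl` list doubles as a first draft of the Phase 5 handoff points: each pipeline on it marks a place where ETL output lands in the warehouse and dbt takes over.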

The Bottom Line

dbt embodies the future of analytics transformation: modularity, accessibility, and agility. But it's not a silver bullet.

The most effective data leaders I collaborate with leverage dbt for its core strength: SQL transformations within modern data warehouses. They reserve traditional ETL for the edge cases where dbt still falls short, at least for now.

Your next step: Audit your existing pipelines. What proportion might transition to dbt without sacrificing functionality?

What's your experience with dbt migrations? Hit reply and share your biggest challenge. I read every response.

Talk soon,

Khurram

P.S. If this resonates with your experience, please share it with your colleagues who are facing similar decisions. These architectural choices are too important to guess at.

Want more like this? Hit reply and let me know what data engineering topics you want me to dive into next.