#014 - 5 Steps To Choose Between ETL/ELT With Confidence Even if You're Not a Data Architect

Stop following outdated rules in your data migration. Here's how to choose what actually works for YOUR business...

Hey there,

Welcome to this week's edition, where we tackle one of the most challenging decisions in modern data architecture: ETL or ELT? Data leaders have argued about this for years, and organizations can waste a large share of their budget on the wrong data transformation approach. The debate has become more confusing than helpful.

Introduction

According to a 2023 IDC survey on Data Integration Strategies, 68% of organizations reported making ETL/ELT architectural decisions without thoroughly evaluating their specific workload needs. The same study found that these organizations were 2.5x more likely to experience budget overruns and performance issues.

The stakes are high—choosing without a proper evaluation framework often results in costly rework, extended timelines, and team frustration.

Let's cut through the noise with research-backed guidance:

A practical framework based on industry benchmarks

A simple decision tree validated by successful projects

The hidden costs that vendors don't talk about

Ready to transform your approach to data transformation?

Let’s dive in.

5 Steps To Choose Between ETL/ELT With Confidence Even if You're Not a Data Architect

To build a successful migration and data transformation strategy, you'll need a systematic approach based on verifiable evidence rather than on technology trends.

Based on aggregated insights from industry analysts and successful enterprise migrations, here's the structured framework that consistently delivers better outcomes:

1. Define Your Migration Goals & Constraints

Start by clearly articulating what success looks like for your data modernization project. List your objectives in order of priority:

Operational cost reduction → Favors ELT if your target platform is cloud-based with optimized pricing

Real-time analytics capability → Often better with ELT due to faster raw data availability

Enhanced data quality → May lean toward ETL for upfront cleansing and validation

Regulatory compliance → Usually better with ETL for audit trails and controlled transformations

Increased business agility → Typically favors ELT for faster iterations and explorations

Industry Insight: Financial services organizations prioritize compliance and data quality over cost reduction, often favoring ETL in regulatory-sensitive workflows. Meanwhile, retail and e-commerce businesses prioritize speed-to-insight, favoring ELT approaches for customer analytics.

Actionable Example: A financial services client created this simple prioritization matrix:

Priority 1: Compliance with GDPR and financial regulations

Priority 2: Improved data quality for customer reporting

Priority 3: Reduced operational costs

Priority 4: Faster analytics capabilities

Quick Action Item: Create a simple table with your priorities (1-5) and constraints (budget, timeline, team size) to reference during architecture discussions.
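One way to turn that priority table into a rough signal is sketched below. It is only an illustration: the goal names, the `GOAL_LEAN` mapping (taken from the list in this step), and the linear rank weighting are assumptions, not a validated scoring method.

```python
# Hypothetical mapping from each goal in this step to the approach it tends to favor.
GOAL_LEAN = {
    "cost_reduction": "ELT",
    "real_time_analytics": "ELT",
    "data_quality": "ETL",
    "compliance": "ETL",
    "business_agility": "ELT",
}


def weighted_lean(priorities):
    """Sum rank-based weights per approach.

    priorities: goal names ordered from most to least important.
    Assumption: with n goals, rank 1 weighs n, rank 2 weighs n - 1, and so on.
    """
    n = len(priorities)
    totals = {"ETL": 0, "ELT": 0}
    for rank, goal in enumerate(priorities, start=1):
        totals[GOAL_LEAN[goal]] += n - rank + 1
    return totals


# The financial services prioritization from the example above.
client = ["compliance", "data_quality", "cost_reduction", "real_time_analytics"]
totals = weighted_lean(client)
print(totals)
```

For that client the weights come out at 7 for ETL and 3 for ELT, which matches the intuition that compliance-first teams lean toward ETL. Treat the numbers as a conversation starter, not a verdict.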

2. Map Your Data Landscape Honestly

Create an inventory of your data sources and transformation needs with these critical metrics:

Data volume per source → Flag anything over 5TB as potentially problematic for pure ELT

Data change frequency → Real-time/streaming sources may need specialized ETL pipelines

Current data quality score → Sources with <80% quality scores usually need ETL preprocessing

Transformation complexity → Count steps required for each significant transformation

Target platform limits → Document compute/storage costs and scalability boundaries

Industry Insight: Organizations processing over 10TB daily often experience significantly higher cloud computing costs when using pure ELT approaches versus hybrid ETL/ELT architectures. Telecommunications companies typically show the most dramatic difference, with pure ELT approaches costing substantially more for call detail record processing due to the high transformation complexity.

Quick Action Item: Identify your 3 largest data sources and run a 1-day sample through your ETL tool and target system to compare processing times and costs.
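If you keep the inventory in a spreadsheet or a script, the thresholds from this step can be applied mechanically. The sketch below assumes a hypothetical `Source` record and made-up sample sources; the 5TB and 80% cutoffs are the ones listed above and should be tuned to your own platform.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    volume_tb: float
    quality_pct: float  # 0-100, however your team scores data quality
    streaming: bool


def flags(src, max_elt_tb=5.0, min_quality_pct=80.0):
    """Return the warnings from this step that apply to one source."""
    out = []
    if src.volume_tb > max_elt_tb:
        out.append("volume over threshold: review pure ELT")
    if src.streaming:
        out.append("streaming: may need a specialized pipeline")
    if src.quality_pct < min_quality_pct:
        out.append("low quality: consider ETL preprocessing")
    return out


# Made-up inventory for illustration only.
inventory = [
    Source("crm", 0.4, 92.0, False),
    Source("clickstream", 12.0, 85.0, True),
    Source("legacy_billing", 2.0, 71.0, False),
]
report = {s.name: flags(s) for s in inventory}
for name, found in report.items():
    print(name, found or ["no flags"])
```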

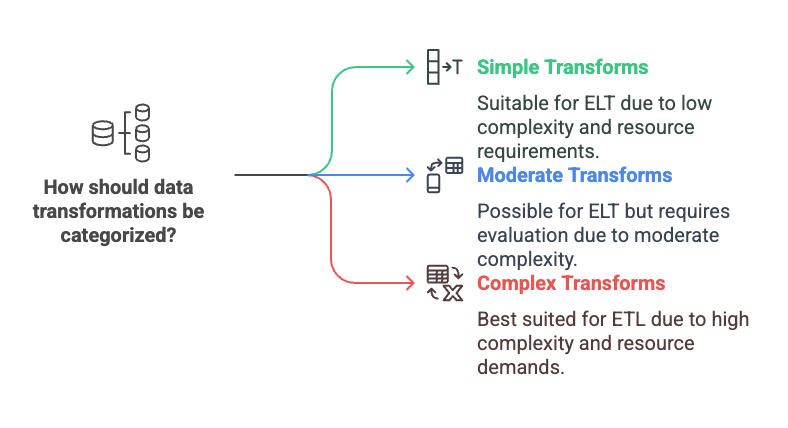

3. Evaluate Your Transformation Complexity

Catalog your transformation requirements and categorize each into these complexity tiers:

Simple transforms (ELT-friendly)

Basic filtering (WHERE clauses)

Column renaming and basic type conversion

Simple calculations (currency conversion, etc.)

Direct 1:1 mappings

Moderate transforms (ELT-possible but evaluate)

Aggregation and grouping operations

Basic joins between 2-3 tables

Window functions and time-based calculations

Data quality checks and simple validations

Complex transforms (Usually ETL-better)

Multi-step data cleansing routines

Machine learning feature engineering

Fuzzy matching or record linkage

Complex business rules with multiple dependencies

Slowly changing dimensions (Type 2+)

Industry Insight: For datasets over 1TB, complex transformations in ELT pipelines frequently consume substantially more compute resources than equivalent ETL processes. Slowly changing dimension (SCD) Type 2 operations on large customer tables stand out: they are significantly more expensive when performed in-database than in traditional ETL tools. Simple and moderate transformations, however, typically show minimal cost differences and faster development cycles in ELT implementations.

Quick Action Item: Use this complexity scale to rate your top 10 most frequently used data transformations. If more than 50% are complex, consider retaining ETL for those specific workflows.
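The 50% rule is easy to check once each transformation has a tier. Here is a minimal sketch; the transformation names and their ratings are invented for illustration, and only the tier labels and the 50% threshold come from this step.

```python
from collections import Counter

# Hypothetical ratings for a team's ten most frequently used transformations.
ratings = {
    "filter_active_customers": "simple",
    "rename_legacy_columns": "simple",
    "convert_currency": "simple",
    "map_product_codes": "simple",
    "daily_sales_rollup": "moderate",
    "join_orders_customers": "moderate",
    "rolling_7_day_average": "moderate",
    "customer_dedup_fuzzy_match": "complex",
    "scd2_customer_dimension": "complex",
    "churn_feature_engineering": "complex",
}


def complex_share(tiers):
    """Fraction of rated transformations that fall in the complex tier."""
    counts = Counter(tiers.values())
    return counts["complex"] / len(tiers)


share = complex_share(ratings)
# Rule of thumb from this step: more than 50% complex suggests retaining ETL
# for those specific workflows.
retain_etl_for_complex = share > 0.5
print(f"complex share: {share:.0%}, retain ETL: {retain_etl_for_complex}")
```

In this made-up case 3 of 10 transformations are complex, so the rule does not trigger, though the three complex workflows may still be worth evaluating individually.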

4. Assess Your Team's Readiness

Conduct an honest skills assessment of your team across these critical dimensions:

Technical Skills Inventory

ETL tool proficiency (Informatica, SSIS, etc.)

SQL expertise level (basic, intermediate, advanced)

Cloud platform experience (AWS, Azure, GCP)

Modern data stack familiarity (dbt, Fivetran, Airflow, etc.)

Programming skills (Python, Scala, Java)

Working Style Assessment

Comfort with agile, iterative approaches (ELT-friendly)

Experience with test-driven development

Preference for visual tools vs. code-based solutions

Adaptability to new technologies and frameworks

Readiness Gap Analysis

Identify the 3 most significant skills gaps for your desired architecture

Determine training requirements vs. hiring needs

Estimate the ramp-up time for new technologies

Industry Insight: Organizations that assess team readiness before migration are much more likely to meet project timelines. Banking teams with strong traditional ETL backgrounds typically need several months to become proficient with modern ELT tools like dbt. Interestingly, teams with strong SQL skills but limited ETL experience tend to adapt to ELT approaches in just 6-8 weeks. Companies that implement structured upskilling programs generally report higher satisfaction with migration outcomes versus those that rely solely on hiring new talent.

Quick Action Item: Ask team members to self-assess their comfort level with ETL and modern ELT tools on a scale of 1-5. Use the results to identify potential champions and areas needing focused training.
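Once the self-assessments are in, a few lines of Python can summarize them. This is a sketch under assumptions: the names and scores are fictional, and the cutoffs (4 or higher for a potential champion, 2 or lower for focused training) are illustrative choices rather than anything prescribed above.

```python
from statistics import mean

# Fictional self-assessment scores on the 1-5 scale.
scores = {
    "ana": {"etl": 5, "elt": 2},
    "ben": {"etl": 3, "elt": 4},
    "chloe": {"etl": 2, "elt": 5},
    "dev": {"etl": 4, "elt": 1},
}


def summarize(team, champion_min=4, training_max=2):
    """Return team averages, potential ELT champions, and people needing ELT training."""
    averages = {
        tool: mean(person[tool] for person in team.values())
        for tool in ("etl", "elt")
    }
    champions = sorted(n for n, p in team.items() if p["elt"] >= champion_min)
    needs_training = sorted(n for n, p in team.items() if p["elt"] <= training_max)
    return averages, champions, needs_training


averages, champions, needs_training = summarize(scores)
print(averages, champions, needs_training)
```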

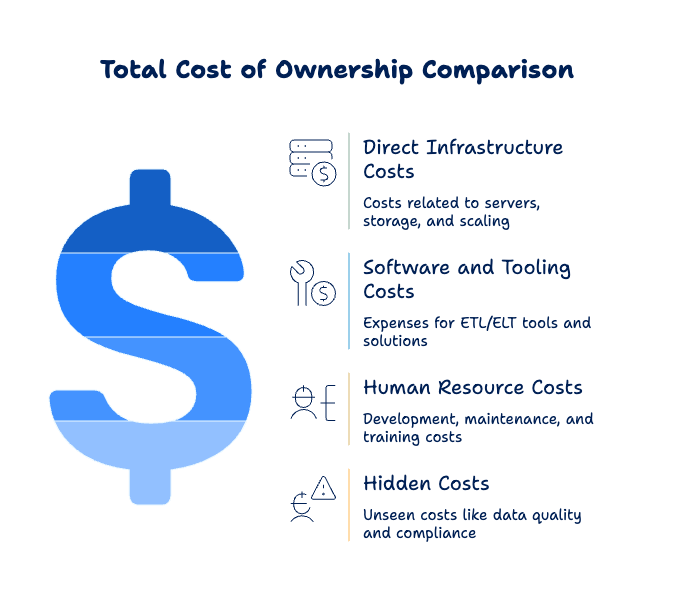

5. Calculate Total Cost of Ownership

Build a comprehensive TCO model comparing ETL vs. ELT approaches with these specific line items:

Direct Infrastructure Costs

ETL server costs (on-prem) or compute resources (cloud)

Data storage costs (staging areas, final destinations)

Network egress charges for data movement

Scaling costs during peak processing periods

Software and Tooling Costs

ETL tool licensing (often per-core or per-server)

ELT tool subscriptions (often per-user or consumption-based)

Monitoring and observability solutions

Version control and deployment tools

Human Resource Costs

Development time (hours per pipeline × hourly rate)

Ongoing maintenance (hours per month × hourly rate)

Training and upskilling costs

Support and operations staffing

Hidden Costs

Data quality remediation costs

Business impact of delayed insights

Compliance and governance overhead

Technical debt accumulation

Industry Insight: Three-year TCO comparisons for enterprises processing 5-15TB of data daily show results that vary by industry. Financial services organizations with strict compliance requirements often see better economics with ETL approaches, while retail and media companies typically benefit from ELT approaches. Notably, hybrid approaches frequently yield the lowest overall TCO across all sectors when workloads are optimally distributed based on transformation complexity.

Quick Action Item: Run a 2-week sample of your most significant data flow through both approaches and multiply the resource consumption by 26 to get an annual estimate. Add this to your licensing costs for a quick TCO comparison.
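The arithmetic behind that quick comparison is simple: 26 two-week periods make up a 52-week year. The sketch below uses a hypothetical `annual_estimate` helper and made-up dollar figures; substitute your own measured resource costs and licensing quotes.

```python
def annual_estimate(two_week_cost, annual_licensing, periods_per_year=26):
    """Annualize a 2-week resource cost and add yearly licensing."""
    return two_week_cost * periods_per_year + annual_licensing


# Made-up figures for illustration only.
etl_total = annual_estimate(two_week_cost=1_800, annual_licensing=60_000)
elt_total = annual_estimate(two_week_cost=3_100, annual_licensing=24_000)

print(f"ETL: ${etl_total:,}  ELT: ${elt_total:,}")
print(f"difference: ${abs(etl_total - elt_total):,}")
```

This covers only the infrastructure and licensing line items. Human resource and hidden costs from the lists above still need to be estimated separately, and a 2-week window may miss seasonal peaks.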

That's it.

Here's what you learned today:

The ETL vs. ELT decision isn't one-size-fits-all—it requires a systematic evaluation of your specific context

Your migration goals, data landscape, transformation complexity, team skills, and total costs all factor into the optimal approach

A hybrid strategy combining elements of both approaches is often the most practical solution.

Decision Tree Cheat Sheet

I promised you a decision tree, so here's a simplified version you can apply immediately:

1. Need Super Clean Data Before Loading (e.g., compliance)?

YES → Start with ETL for that data.

NO → Keep going.

2. Got a Super Fast, Powerful Target Platform (like the Cloud)?

YES → ELT could be a great fit!

NO → Think more about ETL or a mix.

3. Do You Want to Let Your Data Team Explore and Play with the Data Easily?

YES → ELT helps with that!

NO → Either way works.

4. Is Your Team Really Good with SQL and Cloud Tools?

YES → ELT might be smoother.

NO → Stick with what you know (ETL) while learning new skills.

5. Dealing with HUGE amounts of data and really tricky transformations?

YES → Maybe use ETL for those tricky parts.

NO → Move on to the bottom line.

Bottom Line:

Mostly "YES" to ELT questions? You can probably lean toward ELT!

Mostly "YES" to ETL questions? ETL might be your sweet spot.

Mix of answers? A hybrid approach (using both) is likely the best way to go!
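For readers who prefer code to checklists, the cheat sheet can be sketched as a small function. The parameter names are hypothetical, and the tie-breaking is an assumption: it reads "mostly" strictly, returning a single approach only when no answer points the other way, and "hybrid" otherwise.

```python
def recommend(needs_clean_before_load, powerful_target, wants_exploration,
              strong_sql_cloud, huge_and_complex):
    """Map the five yes/no answers to 'ETL', 'ELT', or 'hybrid'."""
    # Answers that point toward ETL, including the "NO" branches of
    # questions 2 and 4.
    etl_signals = sum([
        needs_clean_before_load,
        huge_and_complex,
        not powerful_target,
        not strong_sql_cloud,
    ])
    # Answers that point toward ELT.
    elt_signals = sum([powerful_target, wants_exploration, strong_sql_cloud])

    if etl_signals == 0:
        return "ELT"
    if elt_signals == 0:
        return "ETL"
    return "hybrid"


# Cloud warehouse, SQL-heavy team, no compliance-driven cleansing.
print(recommend(False, True, True, True, False))
# Compliance-heavy shop on a constrained platform with complex transforms.
print(recommend(True, False, False, False, True))
# Compliance needs plus a strong cloud platform and team.
print(recommend(True, True, True, True, False))
```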

Industry observations consistently show that organizations following a structured decision framework are much more likely to meet or exceed their data modernization ROI targets than those making technology-driven decisions. Most successful migrations implement hybrid approaches that optimize for specific workload characteristics rather than forcing all data through a single pattern.

That’s it for this week. If you found this helpful, leave a comment to let me know. ✊

PS: If you're enjoying Data Transformation Insights, please forward this edition to a colleague struggling with their modernization strategy. They'll thank you when they save thousands on their migration project.

About the Author

With 15+ years of experience implementing data integration solutions across financial services, telecommunications, retail, and government sectors, I've helped dozens of organizations implement robust ETL processing. My approach emphasizes pragmatic implementations that deliver business value while effectively managing risk.